We developed a questionnaire to assess readers’ perceptions of media bias through research, reduction, and validation.

10 min read || View Original Paper || Media Bias Questionnaire

Main Takeaways:

- We developed a 48-question questionnaire to measure how readers perceive media bias, which can be used in media studies and annotation processes.

- We semantically grouped the questions and identified the most reliable ones for media bias studies and training automated detection tools:

- Political Ideology Group: Raters showed strong agreement, making it a consistent and accurate factor for identifying media bias.

- Bias and Factuality Group: These closely linked factors reveal political leanings and reliability, offering a comprehensive way to assess media bias through automated classifiers.

- Our questionnaire provides a robust framework that researchers can use to build better media bias detection models and improve data consistency across studies.

Have you ever read a news article and felt it was pushing a particular agenda but couldn’t pinpoint why? Or wondered if other readers have also noticed the same bias or are unaware of how it’s skewed the public discussion in support of certain ideologies?

Or, on a more personal note, have you tried to convince your elderly parents that certain news articles they’ve read and deeply believed in are biased or perpetuate toxic stereotypes, only to find you lacked the evidence to back up your arguments? ;D

Indeed, people’s interpretations of social issues and how they’re portrayed can differ greatly, shaped by their unique experiences and values. So when we encounter suspiciously biased media content, how do we know whether the bias lies in the content or in ourselves?

If you’ve had these thoughts, you’re not alone. People’s interpretation of media bias is complex to understand, and even researchers struggle to find a consistent way to measure it.

So, what makes measuring media bias so challenging?

So, how can we measure the perception of media bias reliably?

The paper we discuss today systematically develops a questionnaire to capture how news consumers perceive media bias in news content.

For example, you can use this questionnaire to test how well your friends, family, or significant others recognize bias in a given news article, either cold or after you’ve explained the concept of media bias to them. You may be surprised by how differently readers from different cultural backgrounds perceive the same content, and come away understanding others’ values and stances better.

You can even turn it into a competition to find out who has the most neutral, unbiased view of the topic under discussion, for those arguments that otherwise can’t be settled ;D.

In research, our questionnaire can be integrated into the media bias annotation process to improve the quality of datasets. Additionally, it can enhance comparability across media bias studies (if, of course, applied in other studies), improve data collection methods, and ultimately boost the performance of automatic models for media bias detection.

A Quick Peek Into Our Questionnaire

Our questionnaire covers various aspects and nuances of media bias while keeping the final set testable (aka, the questionnaire is not too long!). It consists of 48 questions (or sometimes we call them “items”):

- 25 with varying answering formats

– For example, “How did the article handle ‘the issue’?”, rated on a scale from -10 (Too easy) to 10 (Too tough).

- 6 focused on feelings ratings

– For example: “The article makes me feel amused/angry/anxious/disgusted/resentful/sympathetic,” rated from -10 (strongly disagree) to 10 (strongly agree).

- 17 using semantic differentials

– For example, “How would you describe the article: biased or balanced?”, where users select one of the two options.

Wait a minute, what are Semantic Differentials?

A semantic differential is a method to measure people’s subjective opinions or attitudes about something by presenting pairs of opposite words.

For example, if you’re rating a product, you might ask: “Do you find the product more ‘easy to use’ or ‘hard to use’?” The respondent would choose a position between “easy to use” and “hard to use,” reflecting their feelings.

In all, it’s a way to capture the differences in people’s opinions using pairs of contrasting ideas.

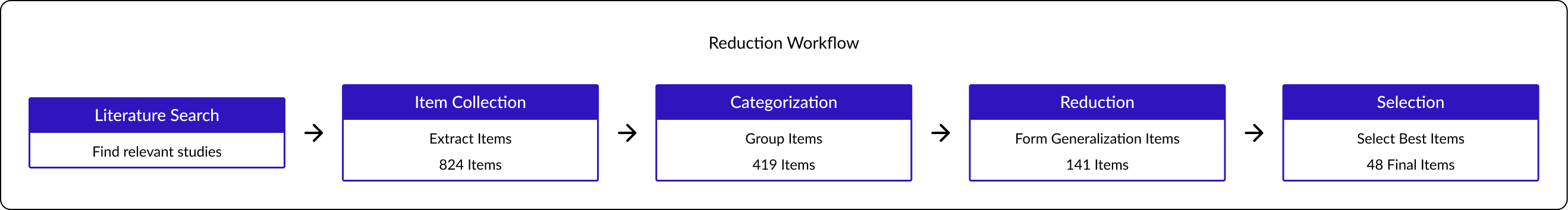

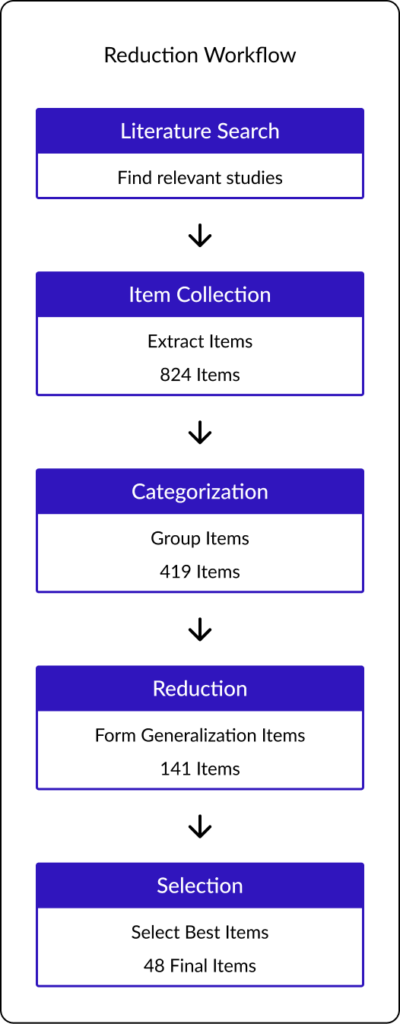

(1) Literature Search → (2) Reduction Process → (3) Item Validation.

1. The Journey of Developing Our Questionnaire

We started with a literature search and identified 405 relevant studies, narrowed them down to 74, and reduced the questions to 48 key items. To refine and validate the questionnaire, we tested it on articles with known bias ratings and used Exploratory Factor Analysis (EFA) to identify patterns and streamline the questions.

Okay, and what is Exploratory Factor Analysis (EFA)?

Exploratory Factor Analysis (EFA) is a statistical method used to identify underlying patterns in survey responses and reduce data to a smaller set of grouped variables. It helps uncover latent constructs, or hidden factors, that explain how survey items are related, based on the variance they share.

For instance, imagine you’re asking people about their favorite activities—movies, music, books, and video games. You notice that folks who love action movies also tend to play video games, while bookworms often enjoy classical music.

EFA steps in like a detective, grouping these related likes into categories, such as “action-packed fun” and “quiet-time favorites,” making the data easier to understand.
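To make the detective analogy concrete, here is a minimal sketch on invented data. It is not the analysis from the paper: it uses a simplified stand-in for EFA (extracting the two largest eigenvectors of the correlation matrix by power iteration, with no rotation step), and the simulated ratings, noise levels, and function names are all assumptions made up for illustration.

```python
import math
import random

ITEMS = ["action movies", "video games", "books", "classical music"]

def simulate_ratings(n_people=1000, seed=0):
    """Invented survey data driven by two hidden tastes (an assumption)."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n_people):
        action = rng.gauss(0, 1)  # hidden taste for "action-packed fun"
        quiet = rng.gauss(0, 1)   # hidden taste for "quiet-time favorites"
        rows.append([
            action + rng.gauss(0, 0.3),  # action movies
            action + rng.gauss(0, 0.3),  # video games
            quiet + rng.gauss(0, 1.2),   # books
            quiet + rng.gauss(0, 1.2),   # classical music
        ])
    return rows

def correlation_matrix(rows):
    """Pearson correlations between the columns of `rows`."""
    n, k = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(k)]
    centered = [[r[j] - means[j] for j in range(k)] for r in rows]
    cov = [[sum(c[a] * c[b] for c in centered) / n for b in range(k)]
           for a in range(k)]
    return [[cov[a][b] / math.sqrt(cov[a][a] * cov[b][b]) for b in range(k)]
            for a in range(k)]

def top_eigenpair(matrix, iterations=300):
    """Largest eigenvalue and its eigenvector via power iteration."""
    k = len(matrix)
    vec = [1.0 + 0.1 * j for j in range(k)]
    for _ in range(iterations):
        nxt = [sum(matrix[i][j] * vec[j] for j in range(k)) for i in range(k)]
        norm = math.sqrt(sum(x * x for x in nxt))
        vec = [x / norm for x in nxt]
    value = sum(vec[i] * matrix[i][j] * vec[j]
                for i in range(k) for j in range(k))
    return value, vec

def extract_loadings(corr, n_factors=2):
    """Loadings = eigenvector * sqrt(eigenvalue), one factor at a time."""
    k = len(corr)
    residual = [row[:] for row in corr]
    loadings = []
    for _ in range(n_factors):
        value, vec = top_eigenpair(residual)
        loadings.append([math.sqrt(value) * x for x in vec])
        # Remove the extracted factor so the next pass finds a new one.
        for i in range(k):
            for j in range(k):
                residual[i][j] -= value * vec[i] * vec[j]
    return loadings

def assign_items(loadings):
    """Put each item in the factor where its absolute loading is largest."""
    n_items = len(loadings[0])
    return [max(range(len(loadings)), key=lambda f: abs(loadings[f][i]))
            for i in range(n_items)]

corr = correlation_matrix(simulate_ratings())
groups = assign_items(extract_loadings(corr))
for name, group in zip(ITEMS, groups):
    print(f"{name}: factor {group + 1}")
```

On this toy data, the two "action" items should land in one factor and the two "quiet" items in the other. A real EFA would typically add a rotation step and a principled choice of how many factors to keep.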

Stage 1: Literature Search for Relevant Papers

- How did we search for relevant papers?

We conducted an extensive literature search on PsychInfo and Google Scholar to find studies on media bias perception. Using the search term “perception of media bias,” we focused on papers written in English and German.

- How did we select relevant papers? (405 → 74 papers)

We manually screened headlines and keywords on both platforms to find studies related to media, media bias, and media perception, which led to 405 potential studies. After reviewing abstracts and full texts, we excluded those without a clear focus on media bias perception or relevant questions, narrowing the selection to 74 studies.

Stage 2: Multi-Iterative Reduction Process

We compiled 824 relevant questions from the 74 selected papers, directly extracting the original wording when available. If not, we reconstructed the questions based on descriptions.

- Stage 2.1 – Categorization

We first categorized the questions into broad themes: Perception of Media Bias, Influence of Media Bias, Political Background, and Demographics. Focusing on the first two categories, we identified 419 items and later revisited the other categories for background information like demographics and political context.

- Stage 2.2 – Reduction

The 419 questions were further grouped into five bias measurement categories: Cause, Existence, Direction, Strength, and Influence. We then condensed semantically similar questions to create constructs that fit multiple items without losing essential aspects.

For example, one construct was: “Would you say that the person/content/outlet was strictly neutral, biased against, or in favor of a side?”

After this, we generated 42 constructs and identified 99 general items that didn’t fit into specific constructs.

- Stage 2.3 – Selection

In the final step, we grouped items by content, selecting those that covered all necessary aspects. We prioritized constructs, using general items only when constructs didn’t fully address an aspect. We excluded certain items based on these five criteria:

- Lack of Generalizability

Some items were too specific to their original context.

- Limited Use of Semantic Differentials

We only included semantic differentials used by at least two authors.

- Difficulty Recovering Original Wording

- Unsuitability for Identifying Bias in Articles

Some questions were too broad, focusing on media outlets rather than specific articles.

- Inadaptability to Visual Analog Scale (VAS)

Since we used VAS for the questionnaire, items that couldn’t be adapted were removed.

After this stage, the final questionnaire comprised 48 items: 25 with varying response formats, 17 semantic differentials, and six feelings ratings.

Stage 3: Item Validation With Exploratory Factor Analysis

After crafting the questionnaire, we had to reflect on its effectiveness: Did we choose the right questions? Do they cover all aspects of media bias? Could we streamline them to focus on the most insightful items?

To answer these questions, we conducted a survey with 663 participants who evaluated bias in 190 articles using our 48-question set.

- Survey

In October 2020, participants were randomly assigned one of 190 articles and asked to complete 48 questions on a Visual Analog Scale (VAS) ranging from -10 to 10. The order of both pages and items was randomized, with attention checks to ensure data quality.

- Participants

Out of 827 U.S. participants, 663 remained after excluding unreliable data. The group was 53.5% women, with an average age of 33.86. Their education levels varied, with 35.7% holding bachelor’s degrees, and they averaged 2.95 hours per day consuming news.

- Article Selection

We carefully handpicked 190 articles representing a range of political viewpoints and topics, from unbiased reporting to highly biased commentary. Topics included major issues such as the Coronavirus, Gun Control, Abortion, and Immigration. Articles came from AllSides and alternative news outlets, covering a spectrum from very liberal to very conservative. (Curious about our selection of articles? They’re just one click away!)
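If you want to set up a similar survey yourself, the randomization described above could look roughly like the sketch below. The numbers 190 and 48 come from the survey description; everything else (the function name `build_session`, the page size of 8, the dictionary layout) is a hypothetical choice for illustration, and attention checks are left out.

```python
import random

N_ARTICLES = 190    # from the survey description
N_ITEMS = 48        # from the survey description
ITEMS_PER_PAGE = 8  # assumption: the page size is not stated in the text

def build_session(participant_id, seed=None):
    """Assign one random article and a randomized page and item order."""
    rng = random.Random(seed)
    article_id = rng.randrange(N_ARTICLES)
    items = list(range(N_ITEMS))
    # Split items into fixed pages, then shuffle the pages and the
    # items within each page.
    pages = [items[i:i + ITEMS_PER_PAGE]
             for i in range(0, N_ITEMS, ITEMS_PER_PAGE)]
    rng.shuffle(pages)
    for page in pages:
        rng.shuffle(page)
    return {"participant": participant_id,
            "article": article_id,
            "pages": pages}

session = build_session("p001", seed=42)
print(session["article"], session["pages"][0])
```

Passing a seed makes a session reproducible, which helps when you need to audit which participant saw which article.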

The survey results helped us evaluate how well the questions measured perceived bias, enabling us to streamline the questionnaire through Exploratory Factor Analysis (EFA).

Exploratory Factor Analysis

Since 48 questions were still too many for a single survey, we used Exploratory Factor Analysis (EFA) to identify the most valuable questions. EFA is a statistical method that helps uncover patterns in a large set of variables, reducing them into a smaller number of summary variables, or factors.

We applied EFA to the 48 questions, grouping them into six key factors. This allowed us to streamline the questionnaire to a final set of 21 questions, divided into the following factors:

- Influence (2 questions)

While measuring an article’s influence, we found low agreement among raters, indicating that perceptions of influence varied widely depending on individual experiences with the content. This suggests that the Influence factor may be less reliable for assessing media bias at the article level due to its subjective nature.

- Political Ideology (2 questions)

Raters showed strong agreement on an article’s political ideology, making it one of the more consistent factors for evaluating media bias. The way Political Ideology matched up with external bias ratings suggests it could be helpful for automated tools to identify media bias accurately.

- Topic Affirmation (3 questions)

There was also strong agreement on whether an article affirmed a particular topic, though it can sometimes be context-dependent. Despite this, the high level of consistency among raters makes it a valuable tool in certain cases for assessing bias in content.

- Bias (6 questions)

The Bias factor, while closely related to Factuality, provided insights into how articles leaned politically. Raters had somewhat less agreement on Bias compared to Political Ideology, but it remains a strong candidate for use in classifiers. Since Bias and Factuality are linked, future research could explore merging these two factors into a single construct.

- Factuality (5 questions)

As with Bias, Factuality also correlated with political leanings. This connection suggests that perceptions of bias and factuality are intertwined, offering a comprehensive view of how people interpret the reliability of media content.

- Negative Emotion (3 questions)

This factor captured the emotional tone of articles, providing additional context for media bias ratings. Though not directly related to bias, it offers another layer of insight, helping to frame how readers emotionally respond to content.

By organizing the questions into these six factors, we reduced the questionnaire to a more focused and efficient set of questions that retained all essential information. The strong correlations between Political Ideology and external ratings further validated the questionnaire’s accuracy in measuring perceived media bias.
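To give a feel for what "correlating a factor with external ratings" means in practice, here is a small sketch. All numbers are invented toy values, not results from the study, and the external scale from -2 to 2, the article names, and the helper functions are assumptions for illustration. The idea is to average the two Political Ideology answers over all raters of an article, then compare those averages with an external rating using Pearson's correlation.

```python
from math import sqrt
from statistics import mean

# Invented toy data: article -> one (item_1, item_2) VAS pair per rater,
# each answer on the -10..10 scale.
toy_ratings = {
    "article_a": [(-8, -6), (-7, -9), (-5, -6)],
    "article_b": [(-1, 2), (0, -2), (1, 1)],
    "article_c": [(6, 8), (9, 7), (5, 6)],
    "article_d": [(3, 2), (4, 5), (2, 1)],
}
# Invented external ratings on an assumed -2 (left) to 2 (right) scale.
toy_external = {"article_a": -2, "article_b": 0,
                "article_c": 2, "article_d": 1}

def factor_score(answers):
    """Average the factor's items per rater, then average across raters."""
    return mean(mean(pair) for pair in answers)

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)

articles = sorted(toy_ratings)
scores = [factor_score(toy_ratings[a]) for a in articles]
external = [toy_external[a] for a in articles]
r = pearson(scores, external)
print(f"correlation with external ratings: {r:.3f}")
```

A value close to 1 means readers' perceived ideology tracks the external rating closely. With real survey data you would also want to check how much raters of the same article disagree with one another.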

3. And now you can use The Questionnaire!

Click here to jump straight to our questionnaire page! If you’ve been paying close attention, you might have already noticed that nearly every time we’ve mentioned “questionnaire” up to now, it’s been a clickable link that takes you to the same place ;D.

Once you’re on the questionnaire page, you can scroll through all the questions in our final collection. Each question has its own link that provides more details—like the construct it belongs to, the answer format, and even where it came from (its origin). It’s an adventure you can explore at your own pace! Thanks for sticking with us on this journey—now the questionnaire is your oyster ;D.