What do we research and why?

The idea that started our research was to develop a system that identifies media bias and presents it to any news consumer. We collected some features and trained some classifiers, but we soon noticed that looking at the problem from a purely computer science perspective is not enough.

Therefore, we extended the research area to other disciplines besides Computer Science, mostly Psychology, Linguistics, Economics, and Political Science. For all areas, we build data sets covering various aspects of media bias. We research how media bias is perceived, how it is represented, and how it influences our individual and collective decision-making. We also try to understand how such a complex construct can be visualized in an understandable way, and how and where readers want to learn about bias in a news outlet. We have also developed a methodology for building a reliable and detailed data set about media bias, which we are currently applying to create the largest data set on the topic.

Many complex questions arise from this research background, so we are gathering knowledge from expert partners worldwide. In the following, we give an overview of the questions we work on. Feel free to contact us at any time, take a look at our team and partners, join us as a partner, student, or Ph.D. candidate, or see our publications, which naturally lag a little behind the current state of the project because of journal and conference review processes. We believe that we will be able to develop a tool that can change the way users consume news.

So far, we cover the following research areas:

Psychology

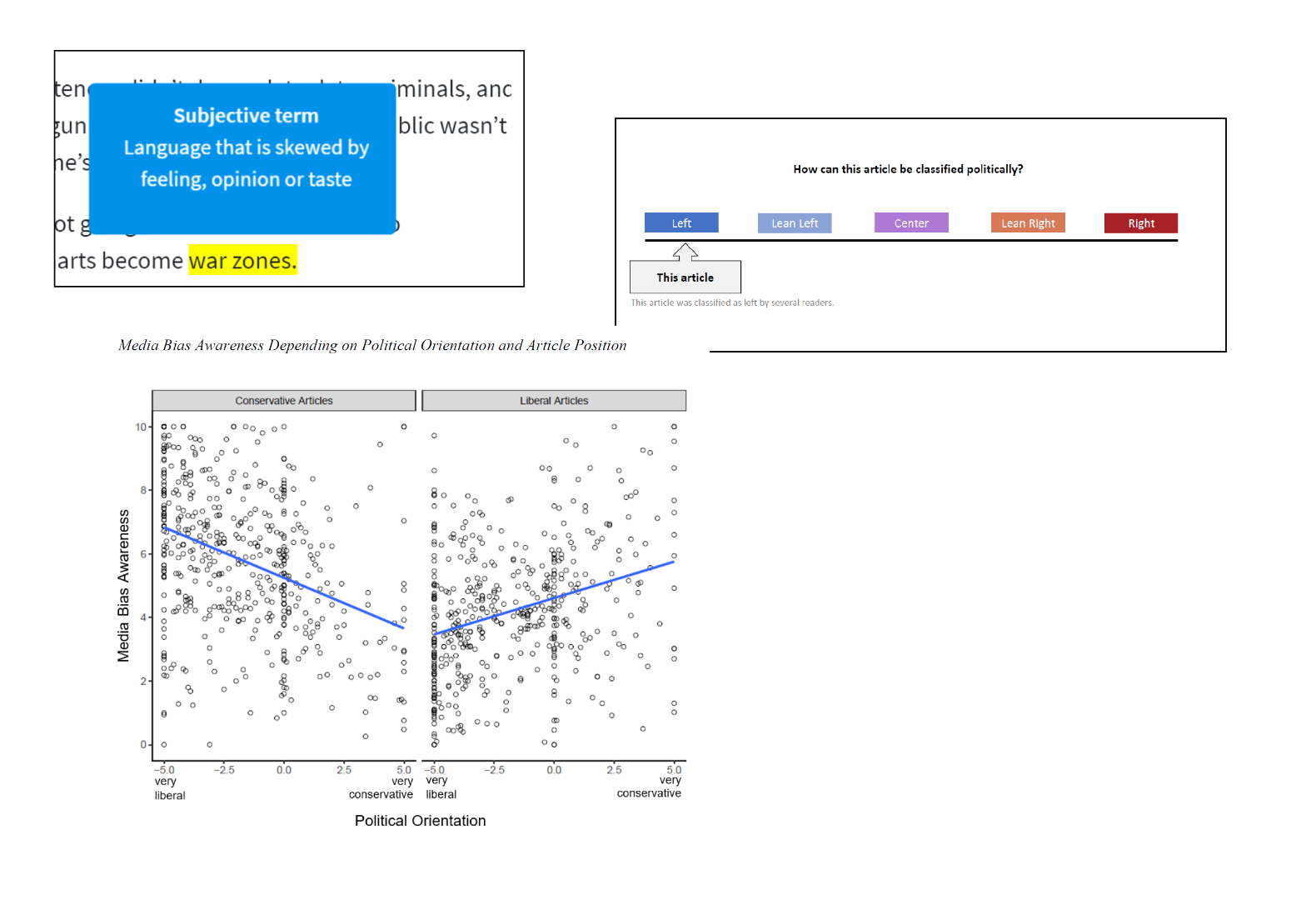

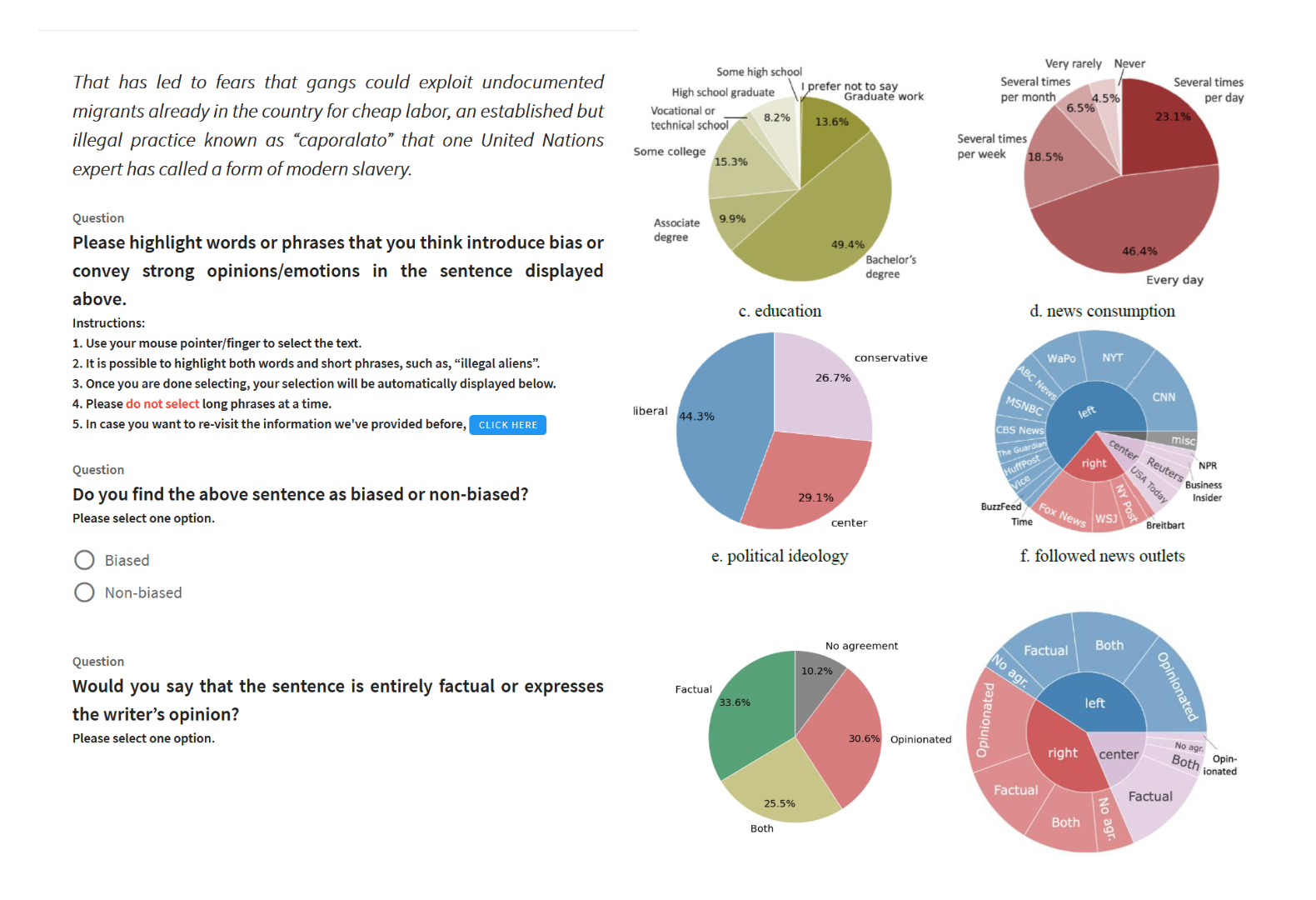

From a psychological perspective, we research how bias is perceived, both in real-world and experimental settings. We research how to visualize media bias and test different types of visualizations (such as a left-right bar, in-text annotations, deeper explanations, or inoculation messages) in various online surveys and in a self-built browser plugin. We also try to identify what role a reader's personal background plays in whether a text is interpreted as biased. Lastly, we collected over 800 questions from text perception research of the last 50 years, reduced them semantically, and are developing a well-tested questionnaire about the perception of media bias and of text in general. We did so because we noticed that the way a question about media bias is phrased plays a significant role in the answer, which existing research has not accounted for. Our first two major publications on these issues are finished and will be published shortly. If you need resources in advance, contact us.

Linguistics

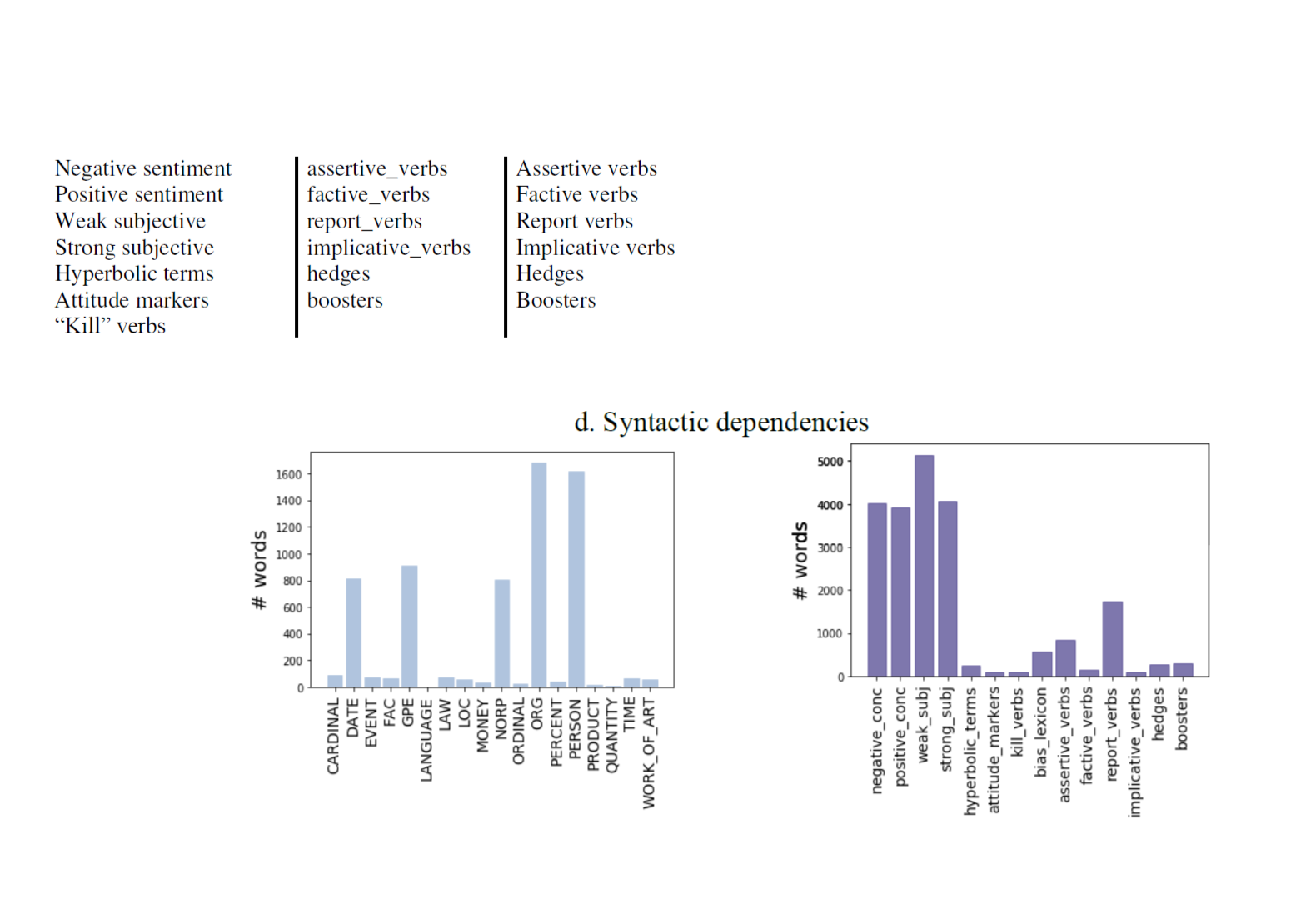

From a linguistic perspective, we try to understand which linguistic features and characteristics play a role in the perception and identification of media bias. How do news consumers react to rhetorical figures, syntactic elements, or specific words? While not all media bias can be explained by linguistics, the field offers considerable descriptive power. In some cases, a specific linguistic figure or pattern strongly increases the probability that a text is perceived as biased. Of our research areas, linguistics is the youngest, even though much of bias research covers related problems, as shown in one of our journal papers here.

Computer Science

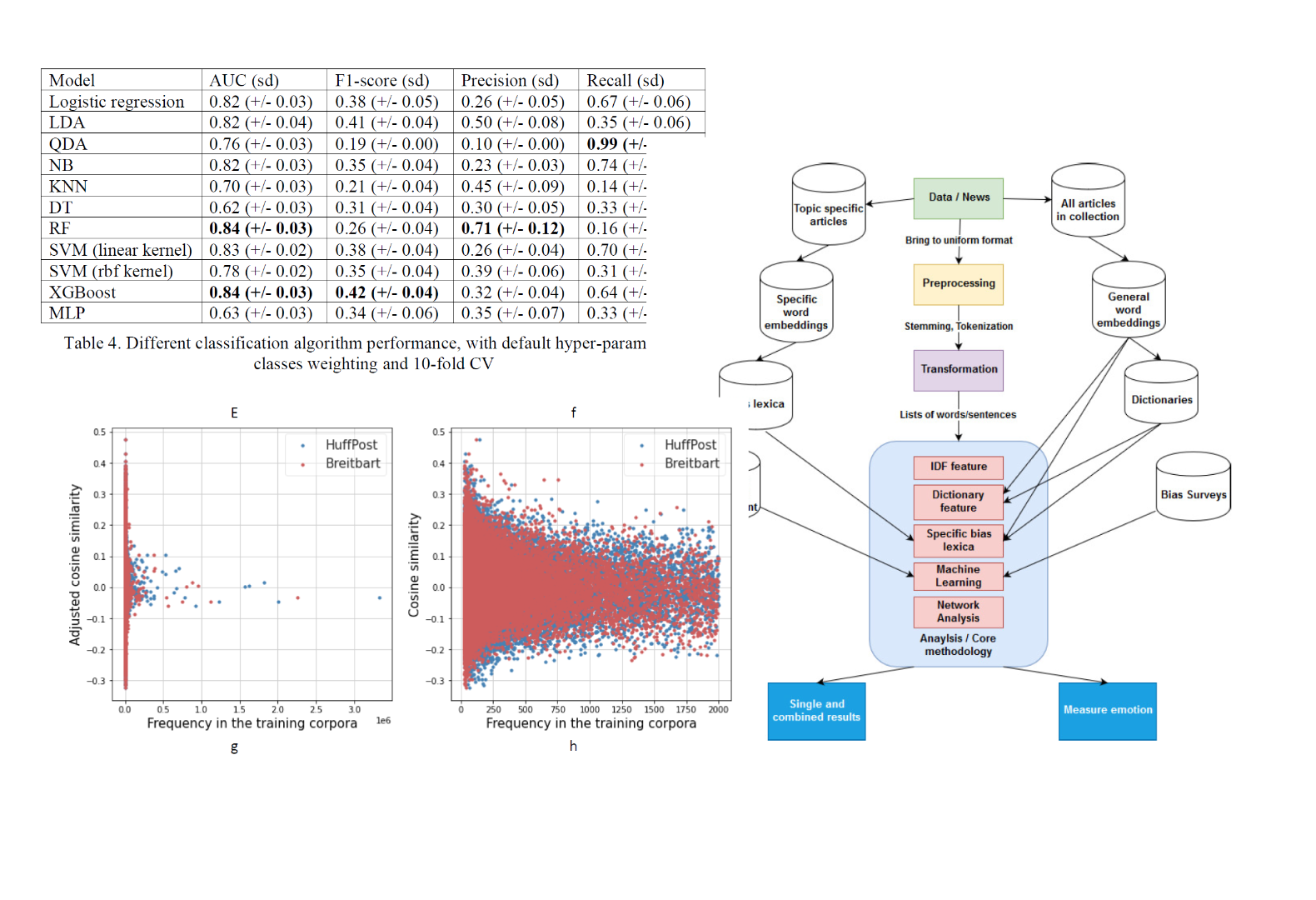

Computer Science is our largest area of interest. We are developing a method to detect media bias reliably and automatically. To achieve that goal, we develop new methods to create data sets (see the last section of this list) for our classifiers. We experiment with classic machine learning as well as deep learning, implement multi-task learning for bias detection, and try to normalize text from different sources (such as Twitter or Wikipedia) to a journalistic style, so that we can separate content from style more easily and build more powerful data sets more quickly. We investigate how content can be measured explicitly and implicitly and then compared across outlets, in order to determine to what extent differences in the meaning and perception of the same words across situations and outlets can be explained. We also built a graph database prototype containing a few hundred million articles from US news companies, to which we apply our classifiers and modern network analysis techniques. To summarize, our main computer science research aims are building powerful classification algorithms to detect bias, developing a database and interface that enable any user to efficiently apply methods to any data set, and identifying the exact meaning of each word in its context.
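As a rough illustration of the classic machine learning side, the sketch below turns a sentence into two simple lexical features and applies a toy threshold rule. The word lists, the names `tokenize`, `extract_features`, and `looks_biased`, and the threshold value are hypothetical placeholders chosen for this example; they are not the features or classifiers used in the project.

```python
import re

# Hypothetical word lists, for illustration only; not the project's lexicon.
LOADED_WORDS = {"disastrous", "radical", "shameful", "heroic", "so-called"}
HEDGE_WORDS = {"allegedly", "reportedly", "supposedly"}


def tokenize(sentence: str) -> list[str]:
    """Lowercase the sentence and split it into word tokens (hyphens kept)."""
    return re.findall(r"[a-z]+(?:-[a-z]+)*", sentence.lower())


def extract_features(sentence: str) -> dict[str, float]:
    """Return the share of loaded and hedging words among all tokens."""
    tokens = tokenize(sentence)
    total = len(tokens) or 1  # avoid division by zero for empty input
    return {
        "loaded_ratio": sum(t in LOADED_WORDS for t in tokens) / total,
        "hedge_ratio": sum(t in HEDGE_WORDS for t in tokens) / total,
    }


def looks_biased(sentence: str, threshold: float = 0.1) -> bool:
    """Toy decision rule standing in for a trained classifier (assumption)."""
    features = extract_features(sentence)
    return features["loaded_ratio"] + features["hedge_ratio"] >= threshold
```

In practice, features like these would be one input among many to a trained model; the fixed threshold here only makes the sketch self-contained.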

Economics & Political Science

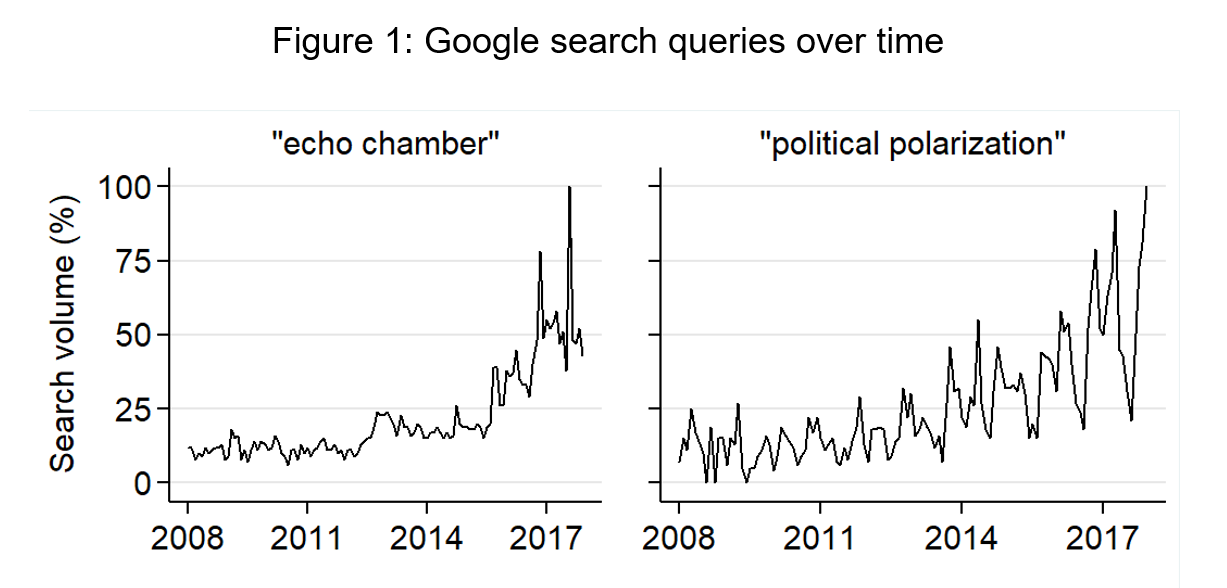

Over the past decade, economists and political scientists have developed a strong interest in media bias. A core question of this strand of research is whether bias is driven by the demand side of the news market or the supply side. Demand-side explanations posit that audiences often prefer to consume content that is compatible with their beliefs, and that profit-maximizing media companies can increase their sales if they pander to these beliefs. Supply-side explanations emphasize the role of journalists, editors, and media owners in causing bias. In other cases, bias is a consequence of the influence of political actors and lobbies. Empirical studies in economics and political science aim to disentangle alternative explanations and shed light on the direction of causality. In addition, researchers investigate the consequences of bias, such as effects on voting or on belief polarization. For instance, this study uses plausibly exogenous variation in unemployment news to estimate the causal effect of left-digit bias on voting in US gubernatorial elections.
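Left-digit bias refers to people reacting more strongly when the leftmost digit of a number changes (for example, an unemployment rate moving from 6.9% to 7.0%) than to an equally sized change that leaves that digit untouched. The following minimal sketch makes the distinction concrete. The helpers `leftmost_digit` and `crosses_left_digit` are hypothetical names for this example, are not taken from the cited study, and assume positive values.

```python
def leftmost_digit(value: float) -> int:
    """Return the first significant digit of a positive number."""
    if value <= 0:
        raise ValueError("value must be positive")
    # Scientific notation always starts with the leading digit, e.g. '6.900000e+00'.
    return int(f"{value:e}"[0])


def crosses_left_digit(before: float, after: float) -> bool:
    """True if the leftmost digit differs between the two values."""
    return leftmost_digit(before) != leftmost_digit(after)


# Both changes are 0.1 percentage points, but only the first alters the left digit.
print(crosses_left_digit(6.9, 7.0))
print(crosses_left_digit(7.0, 7.1))
```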

Media bias data set creation

We create different data sets for various aspects of media bias. To do so, we build and use mixed annotation and survey platforms, and we develop guidelines that teach bias annotators to understand the concept in more depth. We are also developing a game that teaches players to read the news more critically and collects bias annotations at the same time. Information on published data sets and models can be found here.